Natural Language Processing (NLP) is a field of computer science that focuses on enabling computers to understand, interpret, and generate human language. It is a critical area of research that has the potential to revolutionize the way we interact with technology. Deep Learning, a subfield of Machine Learning, has been instrumental in driving progress in NLP. By utilizing Deep Learning techniques, NLP has made significant advancements in areas such as Sentiment Analysis, Machine Translation, and Named Entity Recognition, to name a few.

In this article, we will explore the fundamentals of NLP with Deep Learning, the components that make up an NLP system, and its main applications. We will also discuss the challenges facing the field and its likely future directions. By the end of this article, you will have a working overview of the current state of NLP with Deep Learning and the potential it holds for the future.

Natural Language Processing

Natural Language Processing (NLP) is a subfield of computer science, artificial intelligence, and computational linguistics that deals with the interaction between computers and humans in natural language. In other words, it is a way for computers to process, understand, and generate human language, which is often ambiguous and complex. NLP involves programming machines to recognize human speech, analyze the text, and extract relevant information to perform specific tasks.

NLP techniques are widely used in various applications, including chatbots, voice assistants, automated translation, sentiment analysis, and text summarization. The technology is used to automate routine tasks such as customer service inquiries, email sorting, and even legal discovery.

NLP faces several challenges that stem from the complexity of human language, including ambiguity, idiomatic expressions, and cultural references. However, advances in Machine Learning, particularly Deep Learning, have led to significant progress in recent years, allowing for more accurate and effective language processing.

The role of Deep Learning in NLP

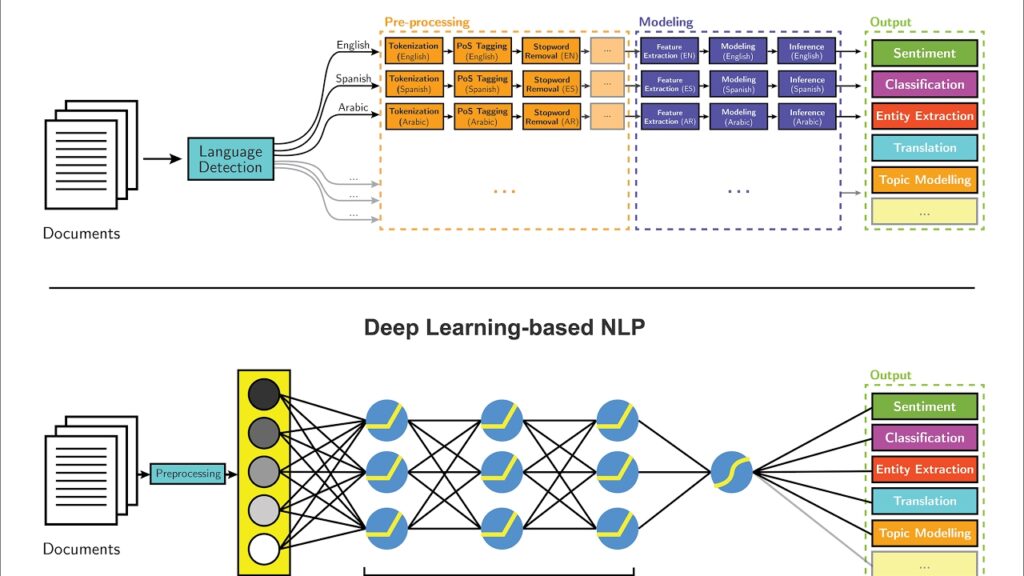

Deep Learning has played a significant role in driving progress in Natural Language Processing (NLP). Neural network architectures such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers have significantly improved accuracy on NLP tasks.

One of the main advantages of Deep Learning is its ability to learn representations of the data automatically. This is particularly useful in NLP, where the input data is often complex and unstructured. Deep Learning models can learn meaningful representations of the input data, such as word embeddings, that capture the semantic meaning of the words in the text.
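To make the idea of word embeddings concrete, here is a minimal sketch of how semantic closeness is usually measured between two word vectors, using cosine similarity. The three-dimensional vectors are invented for illustration; real embeddings are learned from large text corpora and typically have hundreds of dimensions.

```python
import math

# Toy vectors, made up for this example only. Learned embeddings would
# come from a trained model, not from hand-written numbers.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


royal = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
fruit = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"])
print(f"king vs queen: {royal:.3f}")
print(f"king vs apple: {fruit:.3f}")
```

With these toy values, "king" scores closer to "queen" than to "apple", which is the kind of relationship a trained embedding space is expected to capture.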

Another advantage of Deep Learning is its ability to model complex relationships between the input and output data. This is important in NLP, where the meaning of a sentence often depends on the context and relationships between the words. Deep Learning models such as RNNs and Transformers can capture the dependencies between the words in a sentence and use this information to make accurate predictions.
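As a rough illustration of how a recurrent model carries context from word to word, the sketch below implements one step of a simple (Elman-style) RNN in plain Python and runs it over two orderings of the same two words. The weights and the two-dimensional word vectors are hypothetical values chosen for the example, not trained parameters.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a simple RNN: h = tanh(W_x x + W_h h_prev + b)."""
    h = []
    for i in range(len(b)):
        total = b[i]
        total += sum(w_x[i][j] * x[j] for j in range(len(x)))
        total += sum(w_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        h.append(math.tanh(total))
    return h


def run_rnn(sequence, w_x, w_h, b):
    """Feed the vectors in order, passing the hidden state along."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
    return h


# Hypothetical weights (assumed for illustration, not learned).
W_X = [[0.5, -0.3], [0.2, 0.8]]
W_H = [[0.1, 0.4], [-0.6, 0.3]]
B = [0.0, 0.1]

# Hypothetical one-hot vectors standing in for two words.
DOG = [1.0, 0.0]
BITES = [0.0, 1.0]

h_forward = run_rnn([DOG, BITES], W_X, W_H, B)
h_reversed = run_rnn([BITES, DOG], W_X, W_H, B)
print(h_forward)
print(h_reversed)
```

The two final hidden states differ even though the same words were seen, because each step depends on the state left behind by the previous one. This order sensitivity is what lets sequence models represent context.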

Deep Learning has also enabled the development of end-to-end NLP systems, where most of the pipeline, from input representation to output, is learned from data. This has greatly reduced the need for manual feature engineering and has simplified the development of NLP systems.

Overall, Deep Learning has played a critical role in advancing the state-of-the-art in NLP. By leveraging the power of neural networks, Deep Learning has enabled more accurate and effective language processing, opening up new possibilities for NLP applications across industries.

Understanding the basics of Deep Learning

Deep Learning is a subset of machine learning that involves training artificial neural networks to learn from data. The term “deep” refers to the fact that these neural networks have multiple layers, allowing them to learn increasingly complex representations of the data. The goal of Deep Learning is to create algorithms that can automatically learn and improve over time, without the need for explicit programming.

Artificial neural networks are the building blocks of Deep Learning. They are composed of interconnected nodes, or neurons, that process and transmit information. Each neuron receives input from multiple sources, performs a computation, and produces an output that is passed to other neurons. By stacking multiple layers of neurons together, neural networks can learn increasingly complex representations of the data.
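The computation performed by a neuron, and the way layers are stacked, can be sketched in a few lines. This example assumes a sigmoid activation and uses hypothetical, hand-picked weights; a real network would learn its weights during training.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical weights: two inputs -> two hidden neurons -> one output neuron.
hidden = layer([1.0, 0.5], [[0.4, -0.2], [0.3, 0.9]], [0.0, -0.1])
output = layer(hidden, [[1.2, -0.7]], [0.05])
print(hidden, output)
```

The output of the first layer becomes the input of the second, which is all that "stacking layers" means at the level of the arithmetic.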

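Training a neural network involves optimizing a set of parameters, or weights, that control how the network processes the data. During training, the network is fed a set of labeled examples, and the weights are adjusted to minimize a loss function that measures the difference between the predicted output and the actual output. The gradients of the loss with respect to each weight are computed with an algorithm called backpropagation, and an optimizer such as gradient descent uses them to update the weights. Training on labeled examples in this way is a form of supervised learning.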
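The weight-adjustment loop can be sketched for the simplest possible case: a single sigmoid neuron trained with stochastic gradient descent on the logical OR function. The dataset, learning rate, and epoch count are assumptions chosen to keep the example small. In a multi-layer network the same kind of update is applied to every layer, with backpropagation supplying the gradients via the chain rule.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Labeled examples for logical OR: (inputs, target).
DATA = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(data, learning_rate=0.5, epochs=2000):
    """Fit one neuron by repeatedly nudging weights against the gradient."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            prediction = predict(weights, bias, inputs)
            # For a sigmoid output with cross-entropy loss, the gradient of
            # the loss with respect to the weighted sum is (prediction - target).
            error = prediction - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(DATA)
for inputs, target in DATA:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

After training, the predictions for the three positive examples should sit above 0.5 and the prediction for `[0.0, 0.0]` below it.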

One of the main advantages of Deep Learning is its ability to automatically learn features from the data. In traditional Machine Learning, feature engineering is a crucial step in the process, requiring domain expertise and significant effort. Deep Learning greatly reduces the need for manual feature engineering by allowing the neural network to learn the most relevant features itself.

In summary, Deep Learning is a subset of machine learning that uses artificial neural networks with multiple layers to learn from data. Training adjusts the weights that control how the network processes the data, and the learned layers take over much of the work that manual feature engineering used to do.

The Components of Natural Language Processing

The field of Natural Language Processing (NLP) involves various components that work together to enable computers to process and understand human language. These components include:

- Preprocessing: This involves preparing the text data for analysis by removing unwanted elements such as punctuation, numbers, and stop words. It also includes techniques such as tokenization, which involves breaking down the text into individual words or tokens, and stemming or lemmatization, which involves reducing words to their base form.

- Word Embeddings: These are mathematical representations of words that capture their semantic meaning. Word Embeddings are created using techniques such as Word2Vec and GloVe, which learn the relationships between words by analyzing large amounts of text data.

- Sequence Models: These are neural networks that can process sequences of data, such as words in a sentence. Recurrent Neural Networks (RNNs) and their gated variant, Long Short-Term Memory (LSTM) networks, are commonly used sequence models in NLP. These models can capture the context and dependencies between words in a sentence.

- Attention Mechanisms: These are components added to neural networks that allow the model to focus on specific parts of the input data. Attention mechanisms are used to improve the performance of sequence models by allowing the model to selectively attend to relevant parts of the input sequence.
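The preprocessing step from the list above can be sketched with the standard library alone. The stop-word list and the suffix-stripping rule below are deliberately tiny stand-ins, assumed for illustration; in practice a library stemmer or lemmatizer and a fuller stop-word list would be used.

```python
import re

# A tiny illustrative stop-word list; real lists contain far more words.
STOP_WORDS = {"the", "a", "an", "is", "are", "was", "were",
              "and", "of", "to", "in"}

# Naive suffix stripping as a stand-in for a real stemmer.
SUFFIXES = ("ing", "ed", "s")


def tokenize(text):
    """Lowercase the text and keep alphabetic runs only.

    This drops punctuation and numbers in the same pass.
    """
    return re.findall(r"[a-z]+", text.lower())


def stem(token):
    """Strip the first matching suffix if at least 3 letters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Tokenize, remove stop words, then stem what is left."""
    return [stem(t) for t in tokenize(text) if t not in STOP_WORDS]


tokens = preprocess("The 3 cats were chasing the mice in 2 gardens!")
print(tokens)  # ['cat', 'chas', 'mice', 'garden']
```

The result `chas` for "chasing" shows why crude stemming is lossy: the stem need not be a real word, which is one reason lemmatization is sometimes preferred.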

These components work together to enable various NLP applications such as Sentiment Analysis, Machine Translation, Named Entity Recognition, Question Answering, and Text Summarization. By combining these components and leveraging Deep Learning techniques, NLP has made significant progress in recent years, allowing for more accurate and effective language processing.
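The attention mechanism listed among the components can also be sketched briefly. The example below assumes the scaled dot-product form used in Transformers, for a single query vector, and uses hypothetical key and value vectors. It is meant to show the arithmetic, not a production implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale
              for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(dim)]
    return weights, output


# Hypothetical vectors for a three-token input sequence.
KEYS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
VALUES = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
QUERY = [2.0, 0.0]

weights, output = attention(QUERY, KEYS, VALUES)
print(weights)
print(output)
```

Tokens whose keys align with the query receive larger weights, so their values dominate the output. This is the sense in which the model "selectively attends" to parts of the input.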

Applications of NLP with Deep Learning

NLP with Deep Learning has various applications across industries. Here are a few examples:

- Sentiment Analysis: This involves analyzing text data to determine the sentiment or opinion expressed. Deep Learning models such as Convolutional Neural Networks (CNNs) and LSTMs are commonly used for sentiment classification. Applications of sentiment analysis include analyzing customer feedback, monitoring social media, and providing sentiment signals for stock price forecasting.

- Machine Translation: This involves automatically translating text from one language to another. Deep Learning models such as the Transformer architecture have significantly improved the quality of machine translation. Applications of machine translation include translating web pages, product descriptions, and legal documents.

- Named Entity Recognition: This involves identifying and extracting entities such as names, organizations, and locations from text data. Deep Learning models such as BiLSTMs can be used to perform Named Entity Recognition with high accuracy. Applications of Named Entity Recognition include analyzing news articles and social media posts.

- Question Answering: This involves answering questions posed in natural language. Deep Learning models such as BERT, which is built on the Transformer architecture, achieved state-of-the-art performance on question answering benchmarks when they were introduced. Applications of question answering include virtual assistants and customer support chatbots.

- Text Summarization: This involves automatically generating a summary of a longer piece of text. Deep Learning models based on the Encoder-Decoder architecture have been used to generate fluent, concise summaries. Applications of text summarization include summarizing news articles, scientific papers, and legal documents.

Overall, NLP with Deep Learning has numerous applications across industries and has the potential to revolutionize the way we interact with technology. By enabling computers to process and understand human language more effectively, NLP with Deep Learning can help organizations make better decisions and improve the user experience of various applications.

Challenges and Future Directions

While Deep Learning has made significant progress in NLP, there are still several challenges that need to be addressed. Here are a few:

- Bias: NLP models can be biased towards certain groups or individuals, leading to unfair or inaccurate predictions. Addressing bias in NLP models is a critical challenge that requires careful consideration of the training data and model architecture.

- Explainability: Deep Learning models are often considered “black boxes” because it can be challenging to understand how they arrive at their predictions. This lack of transparency can be a significant barrier to adoption in certain applications, such as healthcare and finance. Developing methods to explain the predictions of Deep Learning models is an active area of research.

- Multilinguality: NLP models often perform well on English text, but can struggle with other languages. Developing NLP models that can handle multiple languages is an essential challenge for the field.

The future of NLP with Deep Learning is promising. Here are a few directions that the field is likely to move towards:

- Multimodal Learning: Combining text, speech, and visual information can improve the accuracy of NLP models. Developing Deep Learning models that can learn from multiple modalities is an active area of research.

- Few-shot Learning: NLP models often require large amounts of labeled data to achieve high accuracy. Few-shot learning is a technique that allows models to learn from a few examples, which can reduce the amount of labeled data required.

- Transfer Learning: Transfer Learning involves training a model on a large dataset and then fine-tuning it on a smaller dataset for a specific task. This technique can improve the accuracy of NLP models with limited labeled data.

Overall, these challenges remain open research problems, and the directions above suggest that NLP with Deep Learning is poised to make significant advancements in the years to come.

Further reading

If you’re interested in further reading on Natural Language Processing (NLP) with Deep Learning, here are a few resources to check out:

- “Natural Language Processing with Python” by Steven Bird, Ewan Klein, and Edward Loper. This book provides a practical introduction to NLP with Python, built around the NLTK library.

- “Speech and Language Processing” by Daniel Jurafsky and James H. Martin. This textbook gives a broad treatment of NLP techniques, including neural network methods in its more recent editions.

- “Deep Learning for NLP” course on Coursera by the National Research University Higher School of Economics. This course covers the basics of Deep Learning and its applications in NLP.

- “The NLP Newsletter” by Sebastian Ruder. This newsletter provides updates on the latest research in NLP, including Deep Learning techniques.

- “The Illustrated Transformer” by Jay Alammar. This blog post provides an excellent visual explanation of the Transformer architecture, which is a key Deep Learning model for NLP tasks such as machine translation.

- “Efficient Estimation of Word Representations in Vector Space” by Tomas Mikolov et al. This seminal paper introduced the Word2Vec algorithm, which is widely used for creating word embeddings in NLP.

These resources provide a good starting point for exploring NLP with Deep Learning in more detail.